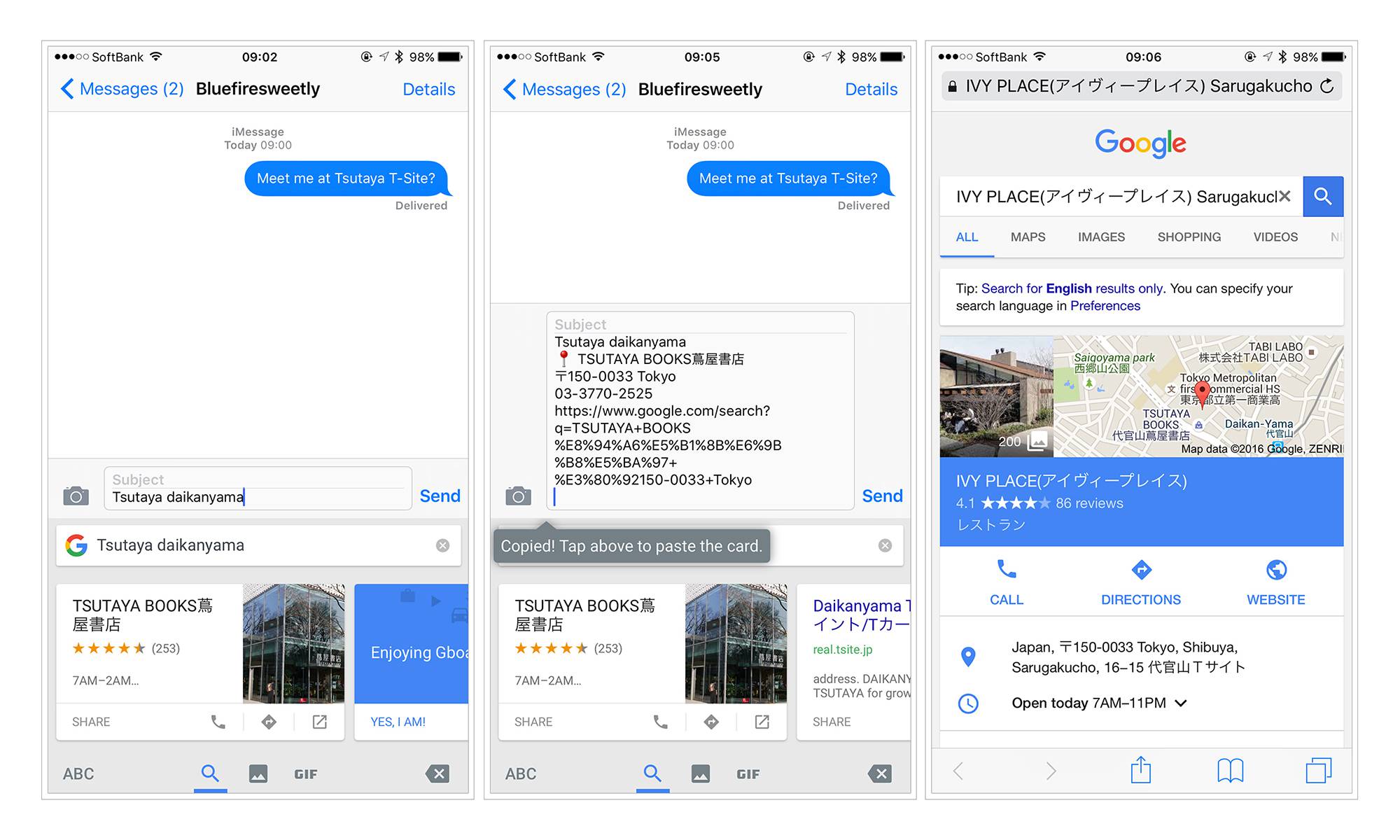

Google recently released Gboard, the first third-party iOS keyboard we’ve ever found intriguing enough to download and install on our phones. Its key value proposition is saving the time (considerable, in aggregate) that the average phone user spends several times a day switching between apps to research, copy and paste information. The featured use case is arranging a place to meet a friend for dinner over text.

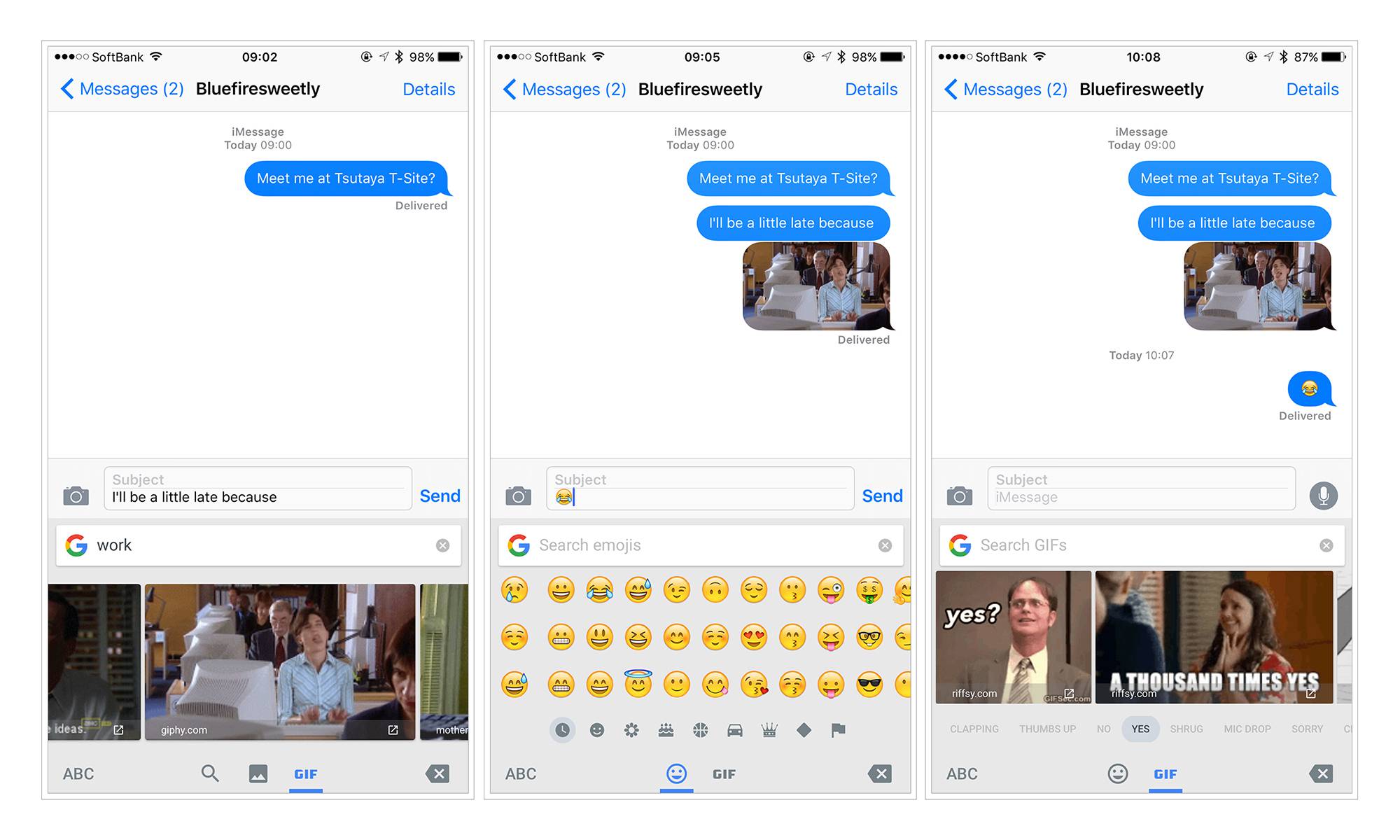

The keyboard has a few other tricks up its sleeve as well: swipe/glide typing, emoji auto-suggestion and (perhaps most amusing, but also surprisingly useful) the ability to search for gifs and images directly from within the keyboard.

First Impressions: The Good

- Glide typing is nice, though probably best reserved for occasional use on longer words, as it tends to get frustratingly tripped up on shorter ones.

- The use of Roboto is actually fairly harmonious with San Francisco, the default iOS system font, and we don’t mind the overall lightness of the font weight (though others may have differing opinions).

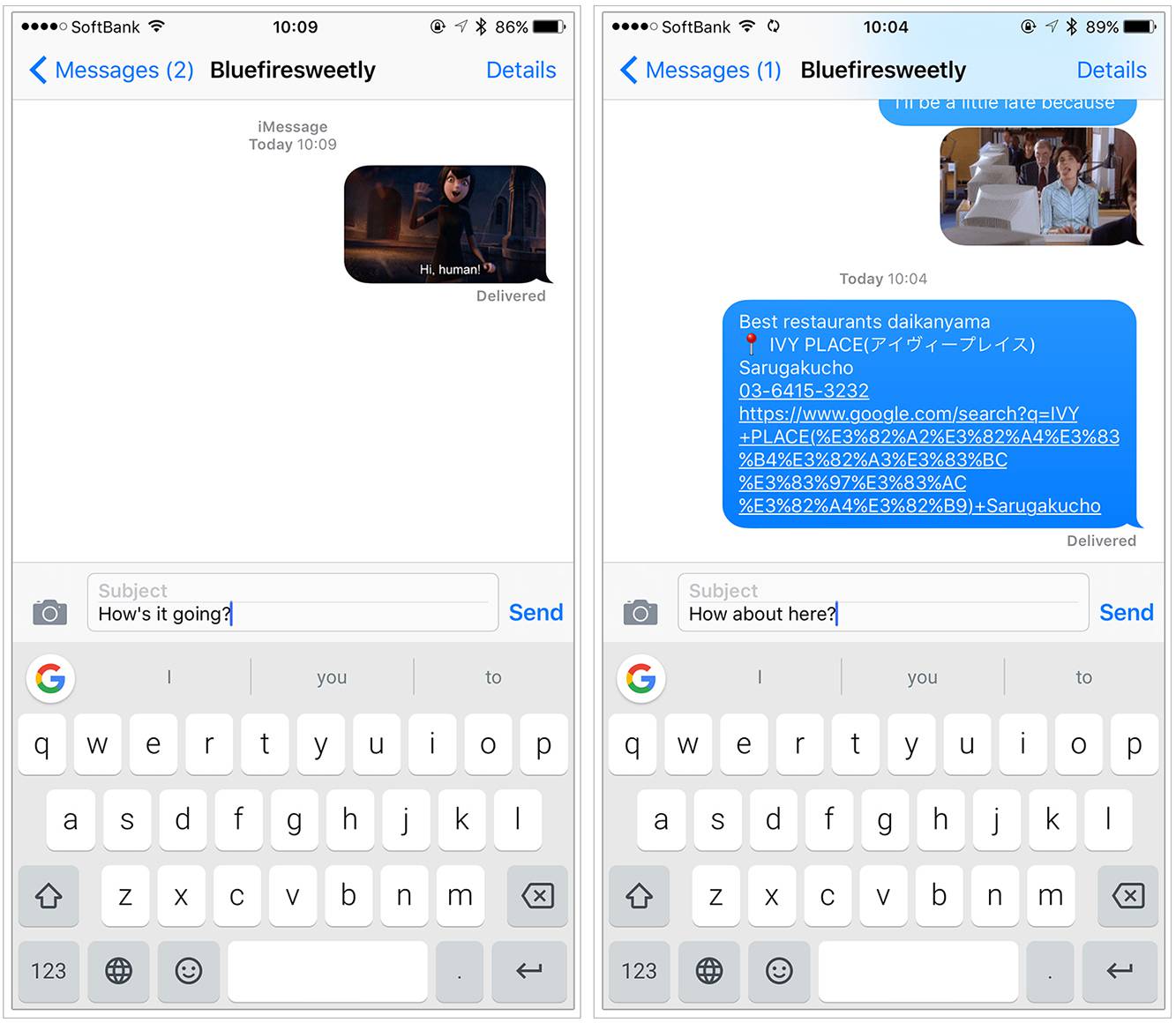

- The fundamental search functionality is an awesome way to leverage the Google Now-style cards that provide bits of contextual information: more often than not, the first two or three cards shown in the search results were exactly what we were looking for (business location map link, restaurant review, etc.).

- The only improvement we’d want is for a simple tap on a card to insert its information directly into the text field, rather than requiring a separate manual tap/copy/paste action. (Perhaps this was done to prevent mis-taps, but there are still better, lower-friction ways around that.)

- The ability to search for gifs or images directly from the keyboard is fabulous and shows a great understanding of how people text beyond plain words. This is especially true from an Asian perspective: every mainstream OTT messaging app includes stamps, animated images and video as basic functionality, and these are as essential to the context of daily communication as text itself. Against that backdrop, this dramatically enhances the usability of Apple’s basic text/iMessage product.

- As an aside, it also neatly sidesteps the UX horror that is the unsorted, unmanageable camera roll. Gone are the days of scrolling through tens of thousands of square thumbnail images trying to find the perfect image/gif you downloaded some weeks ago, knowing how useful it would be for a text, all the while trying to respond while the context is still relevant and witty (after all, timing is everything when it comes to reaction gifs). Now the perfect gif is just a “grumpy cat” or “challenge accepted” search and a tap away.

- Sliding your thumb across the space bar moves the insertion point accordingly, which is much more accessible than the force touch that native iOS requires (and that only newer iPhones support).

- Having emoji as just another panel within the keyboard, rather than a clunky separate keyboard as in the native iOS implementation, is fabulous.* It gives full access to all the iOS emoji from the English keyboard without laborious, clunky switching between keyboards: it’s simply a tap, a swipe and a tap, which significantly minimises the interruption to the writing flow compared with the default emoji implementation.**

- Linking locations copied from the keyboard to the far superior Google Maps rather than the crappy Apple Maps may be a self-interested choice on Google’s part, but it also provides a much better experience for end users. We hate anything that uses Apple Maps by default; we hate Apple Maps so much that we manually launch Google Maps and copy/paste addresses in as a workaround.

*We actually removed the default iPhone emoji keyboard from our phones a long time ago because of how annoying the implementation was for bilingual users (see below). The cost, of course, was losing any kind of easy access to the default iOS emoji. Since most of our communication happens via OTT messenger apps like LINE/WeChat (which have their own, far superior emoji/stamp input methods), this was a bearable problem, but it also meant we hated using Apple’s iMessage/text, because it was like typing with one hand tied behind our back (emoji/stamps are a crucial part of mainstream communication in Asia, and it’s hard to communicate with text alone).

For some of the more commonly used emoji, there are a couple of workarounds. In Japanese input mode, emoji are sometimes offered as suggestions in response to certain (Japanese) input words, so this can often serve as a shortcut to trigger emoji (and, coincidentally, works more reliably than Gboard’s emoji suggestions). For English, the same functionality can be hackily approximated by judicious use of the “Auto-replace” feature in the keyboard settings, with appropriate mappings.

**Having emoji implemented as a separate keyboard may be less of a problem for people who only ever use a single input method (English, for example), as tapping the globe key simply toggles between the standard keyboard and the emoji keyboard. But for those who need two different language keyboards in their daily life (such as Japanese and English), Apple’s default implementation inserts a third, almost always useless keyboard between the two “normal” ones, which required us to press the keyboard switch key three times to switch between Japanese and English (to skip the emoji keyboard) rather than just once (as would be the case with only two keyboards). Given how often anyone in Japan needs to switch between English and Japanese input, this proved to be a huge time waster, so we removed it.

First Impressions: The Less Good

- The switch to allow “full access to Search” was confusing in the initial setup/walkthrough screens. The screenshots use Material Design-style selection switches, which initially led us to think this was a setting within the Gboard app (other Google iOS apps also use Material Design-style switches), when in fact it’s a setting buried three levels deep within the native iOS third-party keyboard preference panel (which, naturally, uses iOS-style toggle switches). It took us a few minutes of frustrating switching back and forth to figure this out.

- The predictive emoji functionality*** sounds like a great idea in theory. Asian OTT messenger apps such as LINE/WeChat/KakaoTalk have been doing this for ages now, and it’s super useful for several reasons:

  - It opens up far more ways of accessing emoji/stamps.

  - It’s far faster: you can literally just start typing a word and then tap, rather than laboriously swapping keyboards and then swiping through countless panels of tiny emoji/gifs searching for the right one.

  - It’s delightful: each time we discover a new word that triggers a stamp/emoji, it’s like a little hidden easter egg, to say nothing of the fact that it also intriguingly elucidates what some of the more obscure stamps/emoji are supposed to represent, making for a small aha! moment every once in a while. Basically, it’s super duper fun to discover these things whilst doing other tasks.

- Unfortunately, Gboard’s implementation of emoji prediction seemed rather flaky when we tried it out. Often, typing words that we thought should trigger emoji (e.g. “key” or “dancer”, which were used as examples in the release blog post) would do nothing; other times an emoji would not appear in the suggestions box the first time we typed a certain word (say, “monkey”), then appear the second or third time, then fail to appear again later.

- This makes it unreliable enough that we doubt we would ever depend on it as a feature. Emoji prediction is not the same as normal word prediction: if you want me to believe that I can type a certain word to get an emoji, then reliability is absolutely key. The user needs to know that every single time they type that word, that emoji (or series of emoji) will appear. Otherwise, why bother?

- My guess is that this inconsistency arises because emoji prediction shares the same UI space as normal word prediction and is therefore treated the same way (i.e. what it suggests varies with context and your past input). A better solution might be to take a page from the Asian OTT messenger apps and give emoji their own dedicated emoji/stamp prediction overlay that appears every time you type a trigger word, then clears away seamlessly when the user moves on to the next word without selecting an emoji.

***That is, you type a word like “happy” and not only does the word “happy” show up as a suggestion, but so do various smiling-face emoji.

Conclusion

It’s still too early to tell how this keyboard will stand the test of time, but for the foreseeable future it will remain the default English input method on our phones, and recipients of our iMessages can look forward to many more Nyan Cat and Grumpy Cat images and gifs in our daily conversations…